Stationary Markov Chains

Note that for the gambler’s ruin problem, . This is an example of a case where the one-step transition function is the same for any value of .

Recall that for a general markov chain, we would have to know the transition matrix for every value of . But what if the transition matrix were to be independent of ? That would simplify our specification too.

Stationary Transition Probability: A Markov Chain with the state space is said to have stationary transition probability if:

It means that the one-step transition probability does not change with .

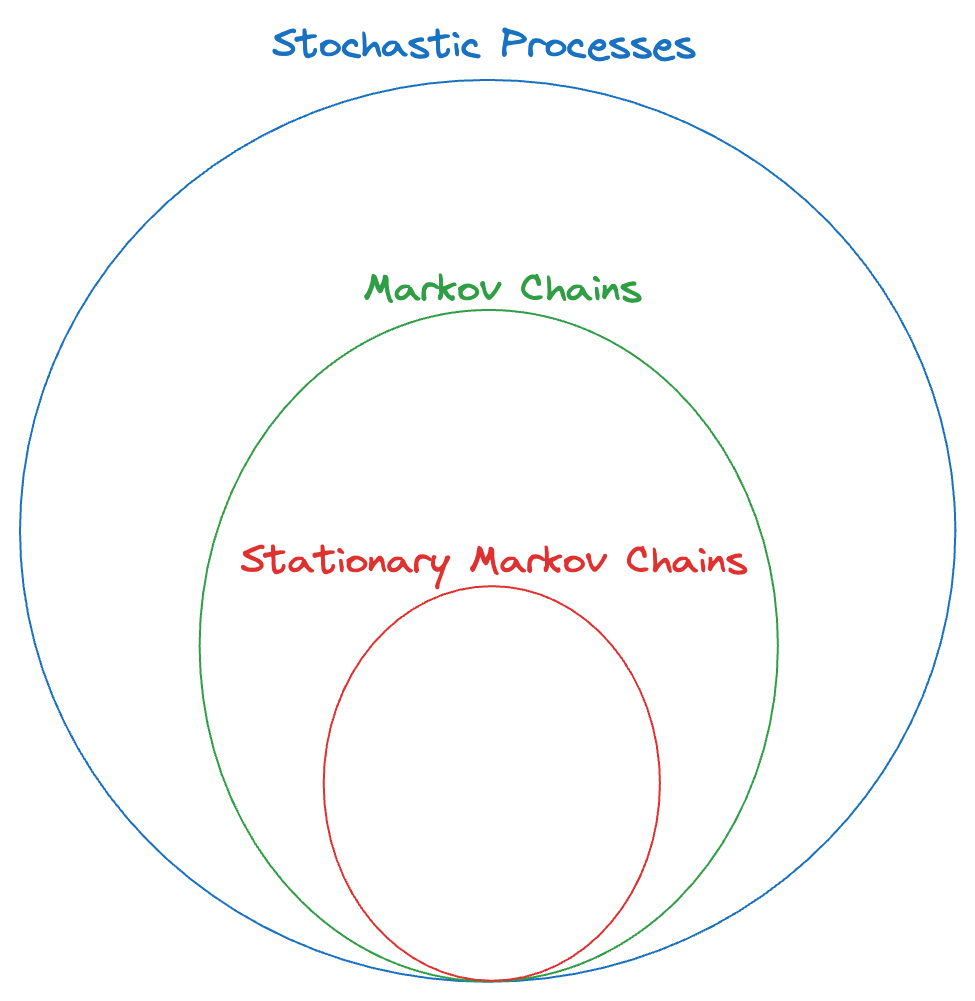

With each additonal constraint on the “general stochastic process”, we are trying to simplify the process (i.e., reduce the complexity) so it is easier to understand and analyze the process.

In the case of stationary markov chains, we only need and (a single one-step transition matrix that is valid for all times) to completely specify the MC.

Notation: For a stationary MC, we can drop the superscripts on the transtion probabilty matrix since it is stationary (i.e., the "same").

What about the -step transition probability matrix? We have (and this is the Chapman-Komlogorov Equation for stationary MC) :

In other words, we denote the -step transition probability matrix by (and is valid for the transition for any value of , for a fixed ). And that matrix is equal to (the original one-step transition matrix raised to the power of ). This follows from the definition of the transition matrix and should be quite intuitive.

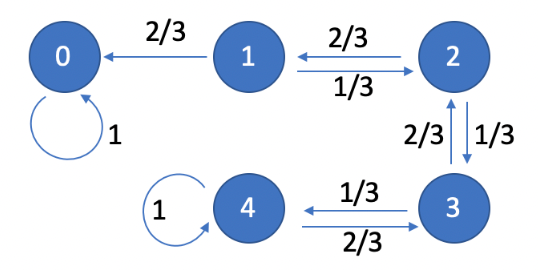

When we only have a finite number of states, we can also use a diagram (think: directed graph) to represent the stationary markov chain. Here, the nodes are the states themselves and the "weight" of each edge is the probability associated with the transition between the states. We omit those edges where the probability of the transition is zero.

Example: Consider the gambler’s ruin problem. Suppose Alice starts with dollars, and the probability of winning each round is , then we can draw the following state-transition diagram:

Notice that we only draw the one-step transitions, NOT all of them. This is sufficient to completely specify the stationary MC.

Why can’t we use such a diagram for a general MC (not necessarily stationary)? Because the probabilities of transitions between the states could change, depending on the value of . So, we would need to draw such a state diagram for every value of (possibly infinite).

For a state diagram, the sum of the weights of all outgoing edges MUST be (since it represents a probability distribution → all possible transitions from that state). The condition does not have to hold for all incoming edges of a node (since that doesn’t really mean much).

Of course, there is a one-to-one mapping between the one-step transition matrix and the state diagram. One can be derived from the other.

Tip: drawing the diagram is a good way to visualize the process + identify the “absorption” states and whether the system is “stable” or not, i.e., will the process ever terminate or not. (for e.g. in case of the gambler’s ruin, is there a non-zero probability that the game never ends and the money keeps oscillating between and → the answer is NO - because we have the probability of winning and losing from every state between and and when we sum all of them up, we get , which means there’s no “probability left over” for the infinite oscillation case).

Also, note that from now onwards, we’ll only be dealing with stationary markov chains.