Random Walk

On the previous page, we derived the results for the general case of the gambler’s ruin problem. But we can go even further.

Mathematically, all that matters are the transition probabilities, the states, and the stopping conditions. The interpretation of each of these depends on the context, but the mathematics does not. So, we can abstract the problem further while keeping the underlying mathematics intact. That is, instead of thinking of $X_n$ as the amount of money that a gambler has at time $n$, we can think of $X_n$ as a location. Then, $p$ and $q = 1 - p$ can be the probabilities of going right/left (for the simple 1-dimensional case), and $N$ can be the destination that the person wishes to reach. We can have the location $0$ be the “end” (maybe a cliff?) → some kind of absorbing state.

Obviously, we haven’t changed the probabilistic structure or “randomness” of the process in any way → the two “processes” (general gambler’s ruin and random walk) are mathematically equivalent.

More formally, consider a stochastic process describing a drunk man who takes a random step right with probability $p$ and left with probability $q = 1 - p$. Note that we don’t have any stopping rule yet. Then, we can write the random walk as a sum of individual steps.

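In particular, $P(X_{n+1} = j + 1 \mid X_n = j) = p$ (the probability of taking a step forward and going from $j$ to $j + 1$) and $P(X_{n+1} = j - 1 \mid X_n = j) = q$ (the probability of taking a step backward from $j$ to $j - 1$).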

Each step can be modelled as a Bernoulli random variable (but in this case, we “code” failure as $-1$ instead of $0$, so we need to change it slightly). With some cleverness, we can write it as:

$$\xi_i = 2Y_i - 1, \quad Y_i \sim \text{Bernoulli}(p)$$

Interpreting the above equation: with probability $p$, we have $Y_i = 1$ and so $\xi_i = 2(1) - 1 = 1$, and with probability $q$, we have $Y_i = 0$ and so $\xi_i = 2(0) - 1 = -1$ (which is exactly what we want).

Then, we have:

$$X_n = X_0 + \sum_{i=1}^{n} \xi_i$$

Here, $X_0$ is the starting location.
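To make the construction concrete, here is a minimal Python sketch (an illustration, not part of the original notes) that builds each step as $\xi_i = 2Y_i - 1$ from a Bernoulli($p$) draw and accumulates the steps starting from $X_0$. The helper names `step` and `walk` are hypothetical, and Python's `random` module is assumed as the source of randomness.

```python
import random

def step(p: float, rng: random.Random) -> int:
    """One step xi = 2*Y - 1 with Y ~ Bernoulli(p): +1 w.p. p, -1 w.p. q = 1 - p."""
    y = 1 if rng.random() < p else 0
    return 2 * y - 1

def walk(x0: int, n: int, p: float, seed: int = 0) -> list[int]:
    """Return the path [X_0, X_1, ..., X_n], where X_n = X_0 + xi_1 + ... + xi_n."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    path = [x0]
    for _ in range(n):
        path.append(path[-1] + step(p, rng))
    return path

print(walk(x0=0, n=10, p=0.5, seed=42))
```

Each consecutive pair in the returned path differs by exactly $\pm 1$, matching the transition probabilities $p$ and $q$.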

Observe that the number of steps $n$ can itself be a random variable, i.e., we may not know in advance when we will stop the random walk. So, $X_n$ can also be considered a “random sum” in this sense.

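A special case of this is when $X_0 = 0$ and the number of steps $n$ is fixed; then we have:

$$X_n = \sum_{i=1}^{n} (2Y_i - 1) = 2\sum_{i=1}^{n} Y_i - n = 2B - n, \quad B \sim \text{Binomial}(n, p)$$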

This follows from the fact that the random variable $\sum_{i=1}^{n} Y_i \sim \text{Binomial}(n, p)$ (assuming that all the Bernoulli variables are independent and identically distributed).
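As a sanity check on the Binomial claim, this sketch (assuming $X_0 = 0$ and a fixed $n$; `pmf_position` is a hypothetical helper) computes $P(X_n = k)$ by substituting $B = (n + k)/2$ into the Binomial pmf. It also lets you verify $E[X_n] = 2np - n = n(p - q)$ and $\text{Var}(X_n) = 4\,\text{Var}(B) = 4npq$.

```python
from math import comb

def pmf_position(n: int, p: float) -> dict[int, float]:
    """P(X_n = k) for a walk started at X_0 = 0 with n fixed steps.

    X_n = 2B - n with B ~ Binomial(n, p), so X_n = k  <=>  B = (n + k) / 2.
    """
    q = 1 - p
    return {2 * b - n: comb(n, b) * p**b * q**(n - b) for b in range(n + 1)}

# positions -4, -2, 0, 2, 4 with probabilities 1/16, 4/16, 6/16, 4/16, 1/16
print(pmf_position(4, 0.5))
```

Note that $X_n$ only takes values with the same parity as $n$, since $X_n = 2B - n$.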

This version of the random walk is NOT the same as gambler’s ruin because we don’t have any stopping rule yet. But suppose we set a stopping rule such that if $X_n$ arrives at $0$ or $N$, it stays at the corresponding state forever. Then we recover exactly the gambler’s ruin setup.

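With first step analysis, we have the formulae (same as gambler’s ruin). Writing $u_k = P(X_n \text{ reaches } N \text{ before } 0 \mid X_0 = k)$:

$$u_k = p\,u_{k+1} + q\,u_{k-1} \quad (1 \le k \le N - 1), \qquad u_0 = 0, \quad u_N = 1$$

$$u_k = \begin{cases} \dfrac{1 - (q/p)^k}{1 - (q/p)^N} & \text{if } p \ne q \\[2ex] \dfrac{k}{N} & \text{if } p = q = \dfrac{1}{2} \end{cases}$$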

When $p = q = \frac{1}{2}$, we call it a “symmetric” random walk.
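A small numerical check of the absorption probability $u_k = P(\text{reach } N \text{ before } 0 \mid X_0 = k)$: one function evaluates the gambler’s ruin closed form ($\frac{1 - (q/p)^k}{1 - (q/p)^N}$ when $p \ne q$, $\frac{k}{N}$ in the symmetric case), and the other solves the first-step equations $u_k = p\,u_{k+1} + q\,u_{k-1}$ with $u_0 = 0$, $u_N = 1$ by Gauss-Seidel sweeps. Both function names are hypothetical, and the iterative solver is just one convenient choice (a direct tridiagonal solve would work too). It assumes $0 < p < 1$.

```python
def reach_top_closed_form(k: int, N: int, p: float) -> float:
    """u_k = P(reach N before 0 | X_0 = k) from the closed form; assumes 0 < p < 1."""
    q = 1 - p
    if p == q:                       # symmetric case p = q = 1/2
        return k / N
    r = q / p
    return (1 - r**k) / (1 - r**N)

def reach_top_first_step(N: int, p: float, sweeps: int = 5000) -> list[float]:
    """Solve u_k = p*u_{k+1} + q*u_{k-1} (1 <= k <= N-1), u_0 = 0, u_N = 1,
    by repeated Gauss-Seidel sweeps; returns [u_0, ..., u_N]."""
    q = 1 - p
    u = [0.0] * N + [1.0]            # boundary values u_0 = 0 and u_N = 1
    for _ in range(sweeps):
        for k in range(1, N):
            u[k] = p * u[k + 1] + q * u[k - 1]
    return u

N = 10
for p in (0.5, 0.4):
    u = reach_top_first_step(N, p)
    print(p, max(abs(u[k] - reach_top_closed_form(k, N, p)) for k in range(N + 1)))
```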

The above definition is for a 1-dimensional random walk (the person can only go forward/backward or left/right). Can we extend this to multiple dimensions?

Note that we’re still dealing with “discrete” space, not continuous space (i.e., you cannot move an arbitrary distance in any dimension; you need to move an integral distance). This is called “discretizing” space.

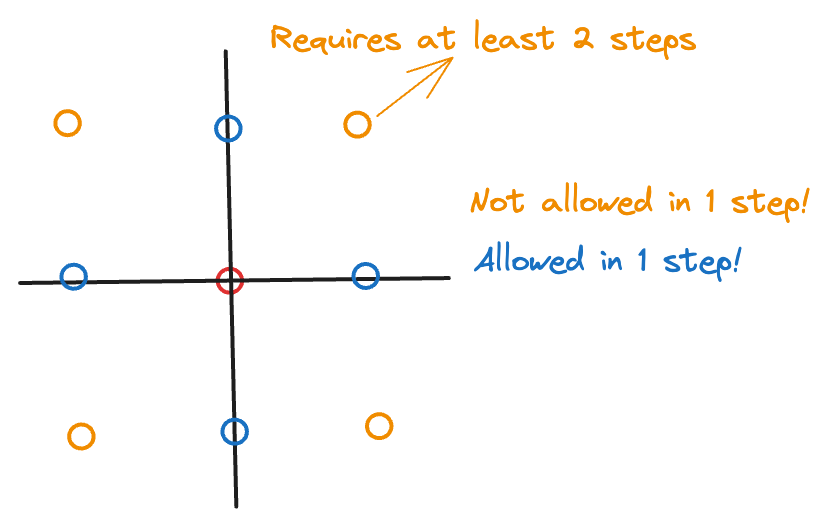

Yes, and it’s not very different. There is nothing “mathematical” about the directions themselves. So, to extend it to $d$ dimensions, we can consider the $2d$ nearest neighbors (think: “top/bottom” or “left/right” in 2 dimensions → it’s like making a binary decision for exactly one of the $d$ dimensions). Note that in this case, we’re only allowed to move along one direction in any step. So, we can pick a direction in $d$ possible ways and then pick either $+$ or $-$ along that direction in $2$ ways → hence, there are $2d$ neighbours. It is NOT $2^d$ → that would be the case if we moved along every dimension in a single step.
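The $2d$ versus $2^d$ count can be checked by brute force. This sketch (hypothetical helper names) lists the allowed nearest-neighbour steps $\pm e_j$ and, for contrast, the $2^d$ moves that change every coordinate at once, which our definition does NOT allow.

```python
from itertools import product

def unit_steps(d: int) -> list[tuple[int, ...]]:
    """Allowed steps +/- e_j: d - 1 zeros and a single +1 or -1 (2d of them)."""
    steps = []
    for j in range(d):
        for sign in (1, -1):
            s = [0] * d
            s[j] = sign
            steps.append(tuple(s))
    return steps

def all_sign_steps(d: int) -> list[tuple[int, ...]]:
    """NOT allowed: move +/-1 along every dimension at once (2^d of them)."""
    return list(product((1, -1), repeat=d))

print(unit_steps(2))  # [(1, 0), (-1, 0), (0, 1), (0, -1)]
for d in (1, 2, 3, 4):
    print(d, len(unit_steps(d)), len(all_sign_steps(d)))  # 2d vs 2^d
```

For $d \le 2$ the two counts happen to coincide, but in 2D the sets still differ: the allowed steps run along the axes, while the all-sign moves are the diagonals.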

In the case of $d = 2$, the diagram below illustrates which steps are allowed by our definition of a random walk:

So, for the 2D random walk, any step $\xi_i \in \{(1, 0), (-1, 0), (0, 1), (0, -1)\}$.

For a $d$-dimensional random walk, consider $\mathbb{Z}^d$, which forms a lattice. In the symmetric random walk case, each of the $2d$ nearest neighbors has probability $\frac{1}{2d}$. We can denote this step as $\xi_i \in \{\pm e_1, \pm e_2, \dots, \pm e_d\}$, where $e_j$ is the $j$-th unit vector. (You can think of each step as being a “delta” tuple with $d - 1$ zeroes and exactly $1$ non-zero value, which can be $\pm 1$. Example: in 3 dimensions, $(1, 0, 0)$ means that you move right along the $x$-axis, and $(0, 0, 1)$ means you move up along the $z$-axis. Note that $(1, 1, 0)$ is NOT allowed in a single step → this explains why you have $2d$ instead of $2^d$ neighbours.)

Then we have (in the same way as for a single-dimension random walk):

$$X_n = X_0 + \sum_{i=1}^{n} \xi_i, \quad X_0 \in \mathbb{Z}^d$$

In fact, the probabilities need not be $\frac{1}{2d}$ → they can be anything, as long as the $2d$ of them sum to $1$.
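A sketch of a general (not necessarily symmetric) walk on $\mathbb{Z}^d$, assuming the $2d$ step probabilities are passed in the order $+e_1, -e_1, \dots, +e_d, -e_d$ (a convention made up for this example). `walk_d` is a hypothetical name; it uses the standard-library `random.choices` to draw each step with the given weights and builds $X_n = X_0 + \sum \xi_i$.

```python
import random

def walk_d(x0: tuple[int, ...], n: int, probs: list[float], seed: int = 0) -> list[tuple[int, ...]]:
    """Random walk on Z^d started at x0.

    probs[j] is the probability of the j-th step in the (assumed) order
    +e_1, -e_1, +e_2, -e_2, ..., +e_d, -e_d.
    """
    d = len(x0)
    steps = []
    for i in range(d):
        for sign in (1, -1):
            s = [0] * d
            s[i] = sign
            steps.append(tuple(s))
    if len(probs) != 2 * d or abs(sum(probs) - 1) > 1e-9:
        raise ValueError("need 2d probabilities that sum to 1")
    rng = random.Random(seed)
    x = tuple(x0)
    path = [x]
    for _ in range(n):
        s = rng.choices(steps, weights=probs)[0]  # one step drawn with the given probabilities
        x = tuple(a + b for a, b in zip(x, s))
        path.append(x)
    return path

# Symmetric 3D walk: each of the 2d = 6 neighbours has probability 1/6.
print(walk_d((0, 0, 0), 5, [1 / 6] * 6)[-1])
```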

For such a random walk, we can also find:

- Stopping time: when $X_n$ arrives at a point $a \in \mathbb{Z}^d$, the walker will stop there, i.e., $T = \min\{n \ge 0 : X_n = a\}$.

- The probability that $X_n$ ever arrives at $a$

using first step analysis.
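On the infinite lattice, the first-step equations form an infinite system, so this sketch makes an extra assumption that is not in the notes: the walk lives in the finite box $\{-L, \dots, L\}^2$, and leaving the box counts as failure. It then solves $h(x) = \frac{1}{4}\sum_{e} h(x + e)$, with $h(a) = 1$ at the target and $h = 0$ outside the box, by Gauss-Seidel iteration. `hit_prob_box` is a hypothetical helper for the symmetric 2D case, and the target is assumed to lie inside the box.

```python
def hit_prob_box(L: int, target: tuple[int, int] = (0, 0), tol: float = 1e-10) -> dict[tuple[int, int], float]:
    """h(x) = P(walker reaches `target` before leaving the box {-L..L}^2 | X_0 = x)
    for the symmetric 2D walk, from the first-step equations
        h(target) = 1,  h(outside box) = 0,
        h(x) = 1/4 * (sum of h over the 4 nearest neighbours of x)  otherwise,
    solved by Gauss-Seidel sweeps until the largest update is below tol."""
    pts = [(i, j) for i in range(-L, L + 1) for j in range(-L, L + 1)]
    h = {x: 0.0 for x in pts}
    h[target] = 1.0
    steps = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    while True:
        delta = 0.0
        for x in pts:
            if x == target:
                continue
            # points outside the box are missing from h, so .get(..., 0.0) encodes h = 0 there
            new = sum(h.get((x[0] + dx, x[1] + dy), 0.0) for dx, dy in steps) / 4
            delta = max(delta, abs(new - h[x]))
            h[x] = new
        if delta < tol:
            return h

print(round(hit_prob_box(5)[(1, 0)], 4))
```

Making the box larger can only increase $h(x)$ (there are fewer ways to escape), which hints at what happens as $L \to \infty$.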

A famous result is:

A drunk man will find his home, but a drunk bird may get lost forever.

It comes from the following result (Pólya’s recurrence theorem):

- For the 2-dimensional symmetric random walk, the probability that the walker eventually arrives at $a$ (maybe his home?) is $1$ (assuming an infinite 2D plane, the walker almost surely reaches his home → read this for a more mathematical definition of “almost surely” → it’s super interesting 🎊)

- For any dimension $d > 2$, the probability that the walker (starting from any other point) ever arrives at $a$ is $< 1$.

It’s a good exercise to try and prove the above result.
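Not a proof, but a quick Monte Carlo experiment (a sketch with hypothetical helper names) makes the dimension dependence visible: it estimates the probability that a symmetric walk started at the origin returns to the origin within a fixed step budget (returning to the start is the standard way to phrase the same recurrence question). Since the budget is finite, every estimate is biased downward; in 2D the true value is $1$, but the approach is very slow (roughly like $1/\log n$), so the 2D estimate stays noticeably below $1$. In 3D the return probability is known to be about $0.34$.

```python
import random

def returns_within(d: int, max_steps: int, rng: random.Random) -> bool:
    """One symmetric walk on Z^d from the origin; True if it revisits the origin."""
    x = [0] * d
    for _ in range(max_steps):
        j = rng.randrange(d)          # pick one of the d axes ...
        x[j] += rng.choice((1, -1))   # ... then +1 or -1 along it: 2d equally likely steps
        if not any(x):                # all coordinates are 0 again
            return True
    return False

def estimate_return(d: int, max_steps: int = 500, trials: int = 500, seed: int = 0) -> float:
    """Fraction of `trials` walks that return to the origin within `max_steps` steps."""
    rng = random.Random(seed)
    return sum(returns_within(d, max_steps, rng) for _ in range(trials)) / trials

for d in (1, 2, 3):
    print(d, estimate_return(d))
```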

We can generalize the random walk in another way, by relaxing the “discrete” constraint to get the continuous case (which can be 1D, 2D, or $d$-dimensional too).

For each step, the walker moves a distance $\Delta x$ in time $\Delta t$. When $\Delta t \to 0$ and $\Delta x \to 0$, the walk can describe a continuous path (we’re considering smaller and smaller intervals of time, and we expect the particle to move a very small amount in each small interval of time).

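Actually, when $\Delta x$ and $\Delta t$ shrink together so that $(\Delta x)^2 / \Delta t$ stays equal to a constant $\sigma^2$ (according to statistical mechanics), the resulting process is called Brownian motion. But this is outside the scope of the class.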
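Why does the ratio $(\Delta x)^2 / \Delta t$ matter? The position at time $T$ is a sum of $n = T / \Delta t$ independent $\pm \Delta x$ steps, each with variance $(\Delta x)^2$ in the symmetric case, so $\text{Var}(X_T) = n(\Delta x)^2 = T \cdot (\Delta x)^2 / \Delta t$. This sketch (hypothetical helper names, assuming $\Delta x = \sigma\sqrt{\Delta t}$) simulates such a scaled walk and compares two scalings: with $\Delta x \propto \sqrt{\Delta t}$ the variance stays at $\sigma^2 T$, while with $\Delta x = \Delta t$ it is $T^2 / n \to 0$ and the walk collapses to a constant.

```python
import math
import random

def scaled_walk(T: float, n: int, sigma: float = 1.0, seed: int = 0) -> list[float]:
    """Symmetric walk with n steps on [0, T]: dt = T / n and dx = sigma * sqrt(dt)."""
    dt = T / n
    dx = sigma * math.sqrt(dt)
    rng = random.Random(seed)
    x, path = 0.0, [0.0]
    for _ in range(n):
        x += dx if rng.random() < 0.5 else -dx   # +dx or -dx with probability 1/2 each
        path.append(x)
    return path

def variance_at_T(n: int, dx: float) -> float:
    """Var(X_T) for n independent symmetric +/-dx steps (each has variance dx**2)."""
    return n * dx**2

T, n = 1.0, 10**6
dt = T / n
print(variance_at_T(n, math.sqrt(dt)))  # ~ T = 1.0: stays finite
print(variance_at_T(n, dt))             # ~ T**2 / n = 1e-6: collapses to 0
```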