Solving Recurrences

Recall that one of our main aims in CS3230 is to be able to analyze algorithms. Often, we have recursive algorithms which leads to running time in the form of recurrence relations. For example, the recurrence relation for MergeSort is .

It is not easy (at least, not always) to derive the big-O or other asymptotic bounds just by looking at the recurrence relations.

So, how do we solve recurrence relations? There are 4 ways described below:

- Telescoping Method

- Recursion Tree

- Master Method

- Substitution Method

Telescoping Method

For any sequence :

In short, all the terms in the middle cancel out and only the first and last term remain. This property is very useful in simplifying such sums of series.

For example,

To split a fraction with multiple terms in the denominator, we use partial fractions.

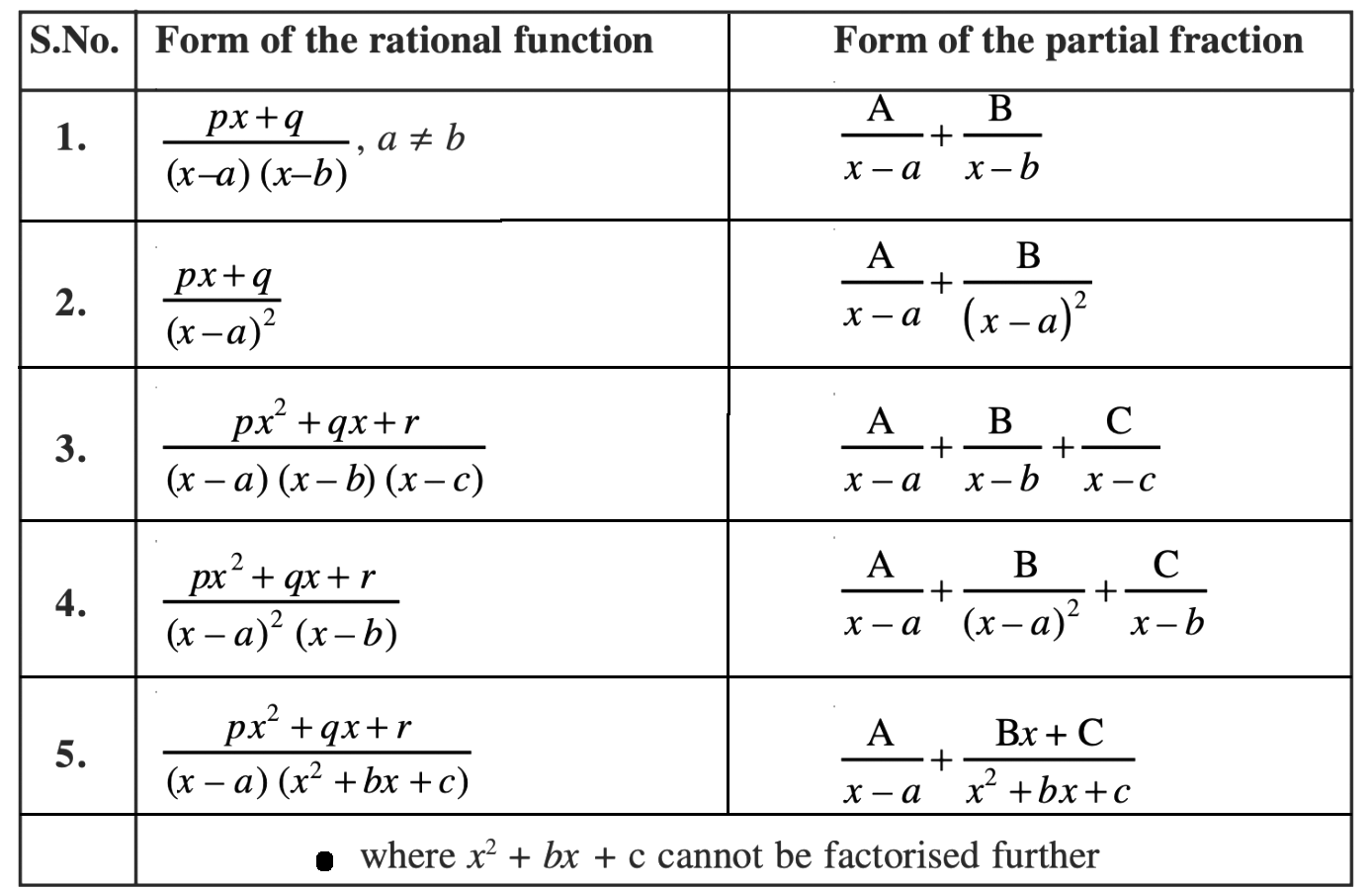

The following is a summary of the partial fractions that are good to know (you can set up the equations and solve for the constants A, B, C, etc.)

As an example, let's try to derive a bound for MergeSort using the telescoping method.

We have: (ignoring theta-notation for simplicity).

This implies,

Let’s go ahead and expand the above equation:

Notice that appears on the RHS in the first equation, and on the RHS in the second equation. Similarly for all the other middle terms. So, what happens when we add all these equations together?? These terms get cancelled out! (if it’s easier to visualize it, you can move all terms except in every equation to the LHS - keep s on RHS - and then add everything up)

We finally end up with

How many s are there? Well, how many equations are there? Since we stopped when reached , we have exactly equations. So, we have many s. That is,

Since is constant time, we get,

In fact, since we didn’t make any loose calculations/assumptions (we did not ignore a single term), we can even say since there have to be at least equations above.

Generalizing the method

Let’s try to generalize this telescoping method now.

Consider a recurrence relation:

The question is: how do we get it to be of the form that we can apply telescoping series results?? What quantity do we use to divide both sides by??

Answer: We want to express the relation as:

So, our goal is to find a that satisfies this condition.

This simplifies to finding a such that . We can take to satisfy this constraint.

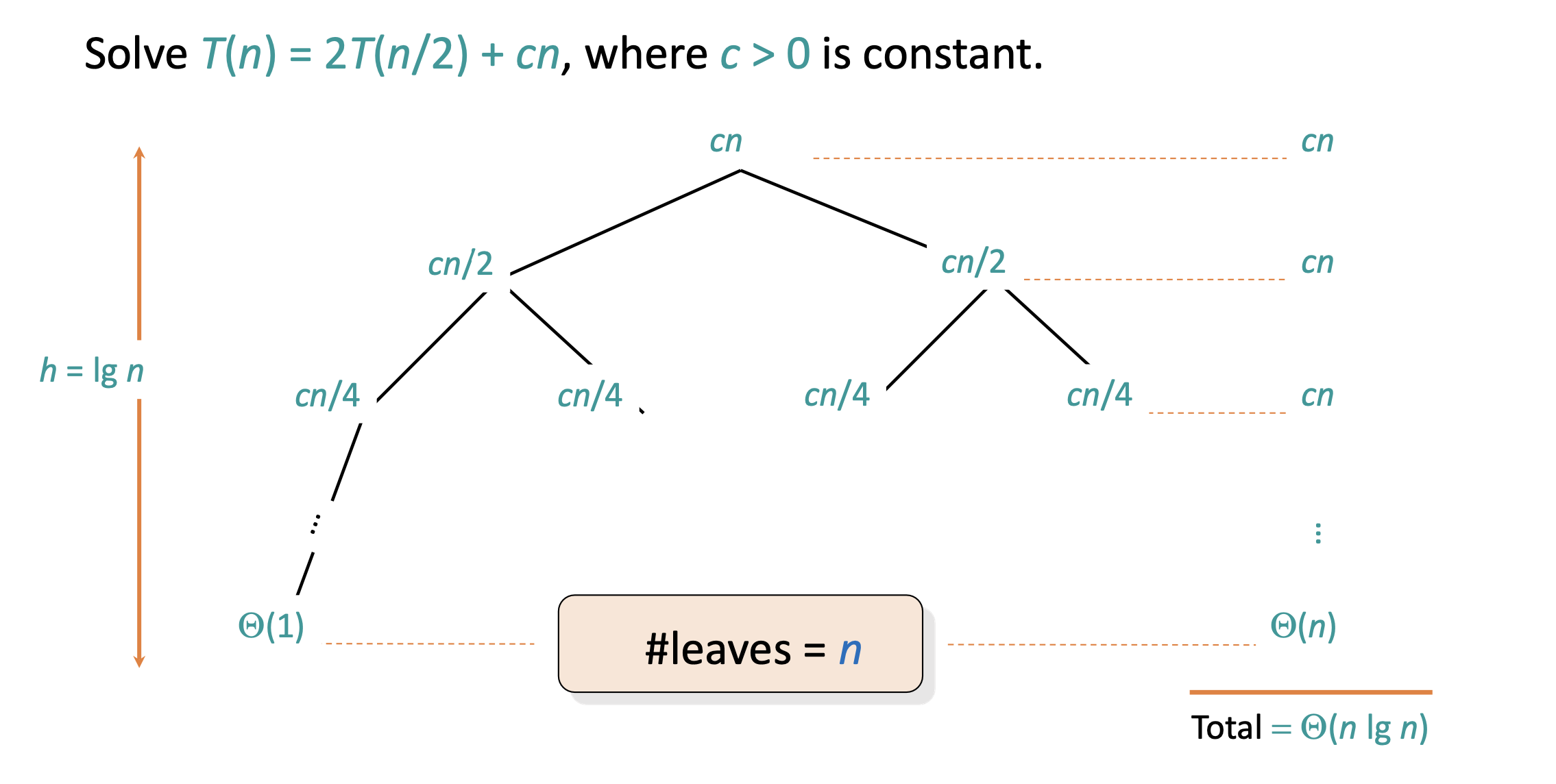

Recursion Tree

This is the most general way which can be used to solve any kind of recurrence relation.

For example,

In short, the steps to follow are:

- Draw the recursion tree by identifying how many recursive calls are made

- Calculate the work done/cost to be paid by the algorithm apart from the recursive calls. This gives the cost at each node

- Find the total cost of each level of the recursive tree

- Sum up the costs of all levels

Master Method

The master method is a (no-brainer) method to solve recurrences of a certain form. It is a direct consequence (i.e., a special case) of the recurrence tree method. Each of the 3 cases of the master method corresponds to a certain type of recursion tree (left as an exercise to figure out).

The master method applies to recurrences of the form: where , and is asymptotically positive.

There are 3 common cases of the master theorem, each involves comparing with (recall this is what we got to be for our telescoping method too! → it turns out that this is the number of leaves in the recursion tree!):

- If for some constant , i.e., grows polynomially slower than by an factor. Then,

- If for some constant , i.e, and grow at similar rates, then

- If for some constant , i.e,. grows polynomially faster than by an factor and satisfies the regularity condition that for some constant , then

Note:

- The regularity condition guarantees that the sum of subproblems is smaller than → otherwise the time complexity might be exponential - imagine if it takes longer to solve the subproblems than the main problem LOL

- For (1) and (3), being polynomially slower/faster than is a strict requirement. For example, grows polynomially slower than . But, does NOT grow polynomially slower than although because you cannot find any that satisfies the requirement.

- cannot be 0 for case 1 and 3. If this happens, then it actually belongs to case 2.

A result of (2) is that for every constant , we have . That is, a polynomial running time, no matter what the power, is always worse (faster-growing) than a logarithmic running time.

Akra-Bazzi theorem is a generalization of master theorem

Substitution Method

This is the most general method: you just “guess” the form of the solution and verify by induction.

Not recommended to be used.

Appendix

Further Details of Master Theorem

In ,

- is the amount of work done to combine the smaller sub-problems

- is the number of subproblems you are dividing into

- is the size of each smaller sub-problem

- is the amount of work done in the entire recursion tree.

- Depth of recursion tree:

- Branching factor of recursion tree:

Then, the 3 cases of master theorem can be understood as follows:

- Case 1 of Master Theorem: Most amount of work happens at the leaves (because of the number of subproblems at the lowest level) since

- Case 3 of Master Theorem: Because , amount of work done in each recursive call grows faster than the number of leaves ( is highest at the root). So, the amount of work done at the root node of the recursive tree dominates → That is why . You need to ensure regularity which means that the work done at the root dominates the amount of work done by its children. (this is not normally the case because in most divide and conquer algorithms, we want to spread the load → most work happens in the recursive call and the combination part is faster). Sometimes, work done by parent does not dominate the children

- Case 2 of Master Theorem: Both and grow at the same rate, so you cannot ignore any of them(e.g.

MergeSort) → the work done by each level of the recursion tree is about the same. Most of the time, . Then, total work done = work done at each level * depth of recursion tree.

- is called the driving function

- is the watershed function